Recent events have really thrown light onto something I’ve been feeling for a while now: we need better public information about the state of the secure internet. We need to be able to answer questions like:

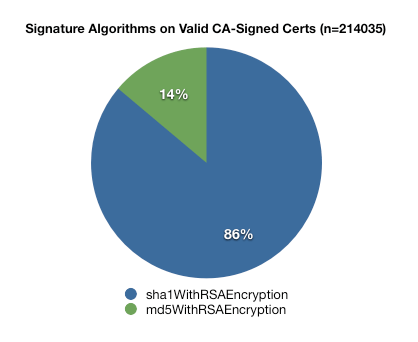

- What proportion of CA-signed certs are using MD5 signatures?

- What key lengths are being used, with which algorithms?

- Who is issuing which kinds of certificates?

So I decided to go get some of that information, so that I could give it to all of you wonderful people.

What I Did

I put together some Python to crawl a list of sites and record the details of their response to an SSL handshake in an sqlite3 database. I don’t know Python, and my SQL is extremely rusty, but it works. The code is here; take it and make it better! Pay particular attention to the TODO list!
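For a rough idea of what one step of such a crawl looks like, here is a minimal sketch using only Python's standard `ssl` and `socket` modules. It is not the crawler's actual code: the function name `probe_host` and the keys of the returned dict are made up for illustration, and certificate verification is deliberately switched off so that certs which would fail validation still get captured. Note that `bits` here is the cipher's secret-key size as reported by the handshake, not the certificate's key length.

```python
import socket
import ssl


def probe_host(host, port=443, timeout=10.0):
    """Open one TLS connection and return the handshake details as a dict.

    Hypothetical helper for illustration; the real crawler may differ.
    """
    # Verification is disabled so that certificates which would not validate
    # are still collected. This sketch therefore records no verify result.
    ctx = ssl.create_default_context()
    ctx.check_hostname = False  # must be cleared before setting CERT_NONE
    ctx.verify_mode = ssl.CERT_NONE

    result = {"host": host, "ok": False, "error": None,
              "cipher": None, "bits": None, "pem": None}
    try:
        with socket.create_connection((host, port), timeout=timeout) as sock:
            with ctx.wrap_socket(sock, server_hostname=host) as tls:
                # cipher() returns (cipher name, protocol version, secret bits)
                name, _protocol, bits = tls.cipher()
                der = tls.getpeercert(binary_form=True)
                result.update(ok=True, cipher=name, bits=bits,
                              pem=ssl.DER_cert_to_PEM_cert(der))
    except OSError as exc:
        # Covers refused connections, timeouts, DNS failures and ssl.SSLError.
        result["error"] = f"{type(exc).__name__}: {exc}"
    return result
```

Calling `probe_host("example.com")` would make exactly one connection and return either the cipher and PEM-encoded cert, or the reason the connection failed.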

As for a list, I used Alexa’s list of the top 1,000,000 sites, which they quite helpfully make available for free download. Of course, we can have all kinds of fun debating whether this is the right list to use; it’s obviously going to have some skews. Anyone with a similar list can either hook me up and I’ll give it a whirl, or download the code and run it themselves. This list did get me 382,860 certificates from the public internet, though, so that’s pretty okay.

What I Can Do With It

For each host in the list, I currently record:

- Whether the connection succeeded or not, and if not, why

- The verification result, using the Mozilla list of CAs

- Various and sundry connection/certificate details (subject, issuer, cipher, keylength)

- The PEM-encoded end-entity cert, for post-analysis
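To make the list above concrete, here is one way those fields could be laid out in sqlite3. This is a sketch under assumptions: the table name `hosts`, the column names, and the helpers `open_db` and `record_host` are all hypothetical, and the published database may well use a different schema.

```python
import sqlite3

# Hypothetical schema: one row per host, mirroring the fields listed above.
SCHEMA = """
CREATE TABLE IF NOT EXISTS hosts (
    host          TEXT PRIMARY KEY,
    connected     INTEGER NOT NULL,  -- 1 if the handshake succeeded, else 0
    error         TEXT,              -- why the connection failed, if it did
    verify_result TEXT,              -- outcome of checking against a CA list
    subject       TEXT,
    issuer        TEXT,
    cipher        TEXT,
    key_length    INTEGER,
    pem           TEXT               -- PEM-encoded end-entity certificate
)
"""

COLUMNS = ("host", "connected", "error", "verify_result",
           "subject", "issuer", "cipher", "key_length", "pem")


def open_db(path=":memory:"):
    """Open (or create) the database and make sure the table exists."""
    conn = sqlite3.connect(path)
    conn.execute(SCHEMA)
    return conn


def record_host(conn, row):
    """Insert or overwrite one host's record; missing fields become NULL."""
    values = [row.get(column) for column in COLUMNS]
    placeholders = ", ".join("?" for _ in COLUMNS)
    conn.execute(
        f"INSERT OR REPLACE INTO hosts ({', '.join(COLUMNS)}) "
        f"VALUES ({placeholders})",
        values,
    )
    conn.commit()
```

Keeping the raw PEM alongside the parsed fields means new questions can be answered later without re-crawling.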

What that means is that I can answer some reasonably relevant questions. For instance, during the recent excitement over MD5 weaknesses, we have been having conversations about retiring MD5 as a supported signature algorithm as, I’m certain, have the other browsers. Making that decision in an informed way requires us to understand how much of the internet still relies on MD5, though, and when those certificates will expire.

What Can You Do With It?

There are almost 400,000 certificates, all told, in a big SQL-queryable database. Want to see a total breakdown of certs by issuer? By verification code? Want to see the distribution of key lengths? Or cipher suites?
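Those breakdowns all boil down to a `GROUP BY` with a count. Here is a small sketch of that query wrapped in Python; the table name `hosts` and the column names are assumptions made for illustration, so check the real schema (for example with `.schema` in the `sqlite3` shell) and adjust before running it against the download.

```python
import sqlite3

# Hypothetical column names; the published database may name them differently.
ALLOWED_COLUMNS = {"issuer", "verify_result", "key_length", "cipher"}


def breakdown(conn, column):
    """Return (value, count) pairs for one column, most common first."""
    # Column names cannot be bound as SQL parameters, so they are checked
    # against a whitelist before being placed into the query text.
    if column not in ALLOWED_COLUMNS:
        raise ValueError(f"unsupported column: {column!r}")
    sql = (
        f"SELECT {column}, COUNT(*) AS n FROM hosts "
        f"WHERE {column} IS NOT NULL "
        f"GROUP BY {column} ORDER BY n DESC, {column}"
    )
    return conn.execute(sql).fetchall()
```

With a connection opened on the unzipped database file, `breakdown(conn, "issuer")` would give the issuer breakdown and `breakdown(conn, "key_length")` the key length distribution.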

Maybe you write software that processes certificates – want 400,000 real world examples to test against? As far as I know, this kind of data hasn’t been available before without paying for it, so I’m actually really interested to know what you can do with it.

How Much of the Internet is this?

Good question! Other estimates I’ve seen put the total population of servers responding to SSL hails out there at about 1-4M. Based on that, I’d say this is probably about 10-20% of the secure internet, but I wouldn’t try to use this data to make magnitude assessments; it’s better suited to proportions and comparative work, really. If my estimates are close, then this is a big enough chunk of the total population to produce pretty good data. Remember, it will exhibit the skews you’d expect from sampling the more popular sites, but that will also serve to weight it towards the certs people are more likely to see. If that’s not the bias you want, use a different list!

The Goods

- The database (gzip’d, sqlite3 format, 367MB) for my Jan 15 crawl.

- The code I used to gather it. (It needs love, love it.)

- If you want to play with something a little less gargantuan, I’ve also put up a trimmed version with just the top 10,000 (gzip’d, 3MB).

If you find good stuff in here, I hope you’ll leave a comment letting me know. If you can’t access the database or download the file and want me to run a query against it, let me know. I only connect to each host once, and all I do is open an SSL connection, so the load on the servers is negligible. The load on my machine while running it, though, can be heavy. To keep the interruption to a minimum, I use conservative settings which make a crawl take about 40 hours, so until I have it running in parallel on a rack of excitingly fast machines, please understand that requests for re-crawls will take a while.

This is very interesting! Would it be possible to also convert the DB to a dump for MySQL? And could you perhaps provide a small snippet from the DB, so that the full file doesn’t have to be downloaded (for testing purposes)?

If there is a way to add sites to your Alexa list randomly I could contribute some harder to find sites, which could give some additional insight.

@Eddy – glad to hear you find it interesting! I’ve added a smaller version with just the first 10,000 hosts (1%), for people who want to play before they consume the big one. I should be able to produce a MySQL-compatible dump, but it will also be… large. 🙂

This is really interesting.

You might also be interested in using things like:

- The RSS feeds for Alexa’s top 100 per country (for smaller countries, these sites might not be in the top 1 mil). These could be merged with the main list, or taken on their own to compare countries.

- Quantcast data (you can also download it, iirc also the top 1 million; there’s a restriction on commercial usage)

I’m sure there are other top lists of sites (Hitwise etc?)

This is very much what was needed, and what I needed for some analysis. Thank you.

You have yet again validated your digital rock star status Mr. Nightingale. Nicely done!

“…These are nice. What are these?…”

So I said, I know rockstars, and He was a rockstar. And this is very peachy; keen– to be not ill of humours, I beg you are wont, that I may spill the sort of bean, as in what– I think– you mean. Forward-looking, face-o-mine, to face this code at about 9:00.

Then on, to ol’ Fort York, where brewers brood, ’round Summertime that latitude: we Yanks are sure to stagger! not unlike, it’s true did stumble, I onto kind sir, of dripping brains

did waste it not upon my shoes!

I guar-on-tea!

Toot-a-loo, Mr Miss (is agua?)(1)

(1)less H2O, for moron tear-E-oh’s, like cheerios… yum yums!